Complete guide to llms.txt implementation for AI-optimized websites

Now that many marketers are shifting their SEO strategy toward AEO (answer engine optimization), llms.txt has entered the game. Implementing it requires some technical knowledge, but it is meant to help AI tools crawl and use your content effectively.

In this article, we'll explain what it is, how it works, and how to implement it on your website to enhance your AEO strategy and take it to the next level. Let's dive in!

What is llms.txt?

llms.txt is a plain text file intended to help artificial intelligence tools better understand websites. The name refers to large language models (LLMs), the technology behind most AI tools. Although people often describe it as a standardized format, it is only a proposed standard, and its format and use have not been officially confirmed.

This file was proposed by Jeremy Howard, co-founder of Answer.AI. The idea was inspired by the robots.txt file and the role it plays in crawling; however, llms.txt is more demanding to create than robots.txt. It requires some technical skill, so a developer can take care of it, but most marketers might find it challenging to do themselves, especially if they work with mid-size or large websites.

This file is placed in your website's root directory, just like the sitemap and robots.txt files. It helps AI models identify the most important content on your website, since they work best with structured summaries and content that is easy to interpret. In the file, you are the one who tells the AI which content is important, much as you tell search engine bots what to crawl in robots.txt. To do so, you write the file in Markdown, highlighting what is important in a format that AI models read well.

Furthermore, adding it to your site can’t hurt, as it doesn’t affect your rankings or performance and won’t interfere with other files on your website. So, if you’re wondering whether it’s worth trying, the answer is yes. However, there are some “buts” in the equation that we will discuss later.

Does llms.txt work?

It surely does! As we've already explained, llms.txt works as a way to tell AI, in a language it understands, what the key content of your site is, what it should add to its database, and what info to use when answering users.

However, if you're unsure about adding it, it's worth noting: even though including it won't harm your site, the file can be heavy and complicated to create. We don't recommend it for medium or large websites, especially if you're not very experienced or don't have a strong dev team. The effort required can be enormous, taking a lot of time to create and implement, and you might end up with a file that does little, while that time could have been better spent improving your AEO strategy in other ways. But if you still want to add it, keep reading.

What's the difference between robots.txt and llms.txt?

They are different files, but they complement each other. robots.txt sets crawling parameters for search engine bots, telling them what they may crawl on your site, while llms.txt tells AI tools what to read and use from your site. Both serve a similar purpose, helping crawlers read and identify content, but the crawlers they are written for differ: robots.txt is for search engine bots, and llms.txt is for large language models, in other words, AI tools.

Both files are uploaded in the same way, but they are written differently. Both are plain text files, but robots.txt contains directives (not URLs) that tell search engine crawlers which parts of a website can or cannot be accessed. In contrast, llms.txt lists important URLs from your site along with additional context, such as titles, summaries, or metadata, to help AI models identify and understand your site's most relevant content.

Here's a table summarizing the key differences between robots.txt and llms.txt:

| | robots.txt | llms.txt |
|---|---|---|
| Goal | Sets search engine crawling parameters | Tells AI tools what to read and use from your site |
| Crawler | Search engine bots (Google, Bing, etc.) | Large language models (AI tools) |
| Upload location | The root directory of your site | The root directory of your site |
| Content and format | Plain text file with directives such as User-agent, Disallow, and Allow that tell crawlers which parts of a website they may crawl. | Plain text file written in Markdown that lists URLs with additional context. |
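
To make the contrast concrete, here is a minimal Python sketch of how each file could be read by a program. The two sample files, the bot name, and the helper names (`robots_allows`, `llms_links`) are our own assumptions for illustration; the link pattern is a simplified reading of the proposed llms.txt format, not an official parser.

```python
import re
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: access directives, no list of pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

# Hypothetical llms.txt: Markdown with annotated links.
LLMS_TXT = """\
# Example Site

> A short summary of the site.

## Guides

- [Getting started](https://example.com/start): First steps for new users
- [Pricing](https://example.com/pricing)
"""


def robots_allows(robots_text: str, user_agent: str, url: str) -> bool:
    """Ask the standard-library parser whether a bot may fetch a URL."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


# Matches "- [title](url)" with an optional ": note" suffix.
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<note>.*))?$"
)


def llms_links(llms_text: str) -> list[dict]:
    """Collect the annotated links listed in an llms.txt file."""
    links = []
    for line in llms_text.splitlines():
        match = LINK_RE.match(line.strip())
        if match:
            links.append(
                {
                    "title": match["title"],
                    "url": match["url"],
                    "note": match["note"] or "",
                }
            )
    return links
```

In short, robots.txt answers a yes/no question about access, while llms.txt yields a curated list of pages with descriptions.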

Which AI use llms.txt?

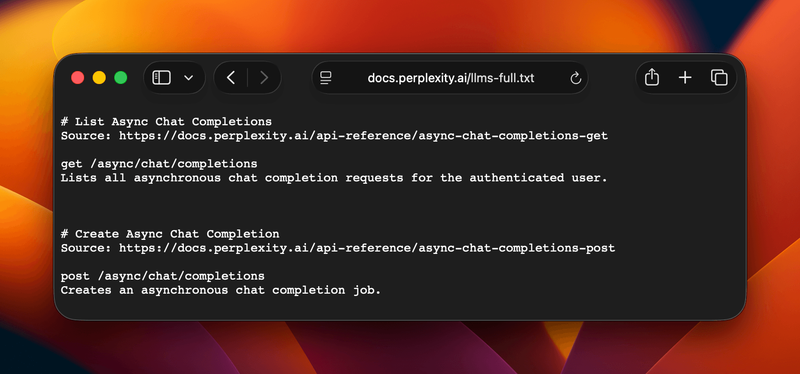

Even though no AI tool officially confirms it, llms.txt is used by nearly all AI models, or at least by the most popular ones. For example, Anthropic and Perplexity both integrate llms.txt files into their domains as you can see in the following screenshot:

Other AI tools that seem to use llms.txt files are ElevenLabs and PineCone, as users have found the files too. Even though creators say their apps don't use llms.txt files, don't let that fool you.

Does ChatGPT use llms.txt?

Regarding ChatGPT, even though the tool states that it doesn't officially use this file, when asked, it says that it can be helpful to include it because it provides structured information that could assist AI systems.

Does llms.txt improve how I appear in ChatGPT?

Yes, as we have already said, it does. In fact, the main goal of using llms.txt is to let you tell the AI, in a format it can easily understand, which content it should use and how your content should appear. For example, if you have a large site with multiple pages about the same topic but want the AI to use a specific page (say, the product page), you can indicate that in the llms.txt file. If you don't express it in a way AI can easily understand, it might handle your site's information however it wants (or can).

This will also help avoid content cannibalization, as the AI will know exactly which page to prioritize for a given topic. As a result, your most important content gets highlighted, and users receive more accurate, relevant answers when interacting with AI tools like ChatGPT. Additionally, it gives you more control over how your brand and key messages are represented, rather than leaving it entirely up to the AI’s interpretation of your site.

Does Google use llms.txt?

No, Google doesn't use llms.txt in any way. According to a LinkedIn post by Kenichi Suzuki, a Product Expert at Google and one of Japan's most respected SEO specialists, Gary Illyes (Analyst on the Google Search team) stated during the Google Search Central Live Deep Dive event that the search engine does not use this type of file and has no plans to implement it, despite the existence of AI overviews on the platform.

This makes total sense, as Google already has a way of crawling websites and an algorithm that works perfectly. In fact, it works so well that some AIs even use the top Google results as their main reliable source of information to rephrase when answering users. For more on how Google interprets content semantically, see our guide on entity-based SEO.

How do I implement llms.txt?

As we have already mentioned throughout this article, implementing llms.txt is not as easy as implementing robots.txt, especially when it comes to creating the file. You will face several challenges if you have a big site or are not familiar with Markdown.

If you want guided assistance with the strategic content selection and Markdown formatting, try our free llms.txt generator tool to create an optimized file for your site. This tool simplifies the complex process by guiding you through strategic content selection while handling all the Markdown formatting automatically. Otherwise, in this section, we will teach you how to create this file manually as well as how to upload it to your site.

How to create an llms.txt file?

Creating an llms.txt file can be somewhat complicated, as it requires planning and some technical knowledge. In this guide, we’ll explain all the steps to create the file correctly.

- The content strategy. Decide what you want AI to add to its database. Go through all your site's content (that's why we don't recommend doing this with mid-size and big sites) and choose which articles, pages, and pieces of content are worth highlighting for AI to crawl. This follows similar principles to optimizing content for SEO: you need to prioritize your most valuable pages.

- The file. Create a plain text file with whatever tool you want (Notepad, Notepad++, VSCode, etc.) and name it llms.txt.

- The content. When writing the file, use Markdown to help AI understand what you want. The file will generally follow this structure:

  - First, an H1 with the name of the project or site, which is the only required section. All other sections are optional but, if not added, AI will have less info about your project and therefore the crawling won't be as good as intended.

  - Next, include a blockquote briefly summarizing the project. It should contain the crucial details needed for AI to understand the rest of the file.

  - Then, you can add Markdown sections such as paragraphs or lists, of any type except headings. These sections contain more detailed information about the project and how to interpret the provided files.

  - After that, you can include more Markdown sections, marked by H2 headers, containing 'file lists' of URLs for further details. In each list, every item must have a hyperlink in the form [name](url), optionally followed by a colon and additional notes.

Here's a more visual representation of how the file will look, based on the format published on the llms.txt site:

# Your Website Name

> Brief description of what your website is about

## Section name

- [Link Title](https://example.com/page-url): Short description of what this page contains

- [Another Link Title](https://example.com/another-page): Explain what readers will find here

- [Third Link Title](https://example.com/third-page): Brief summary of the content

## Another section

- [Resource Name](https://example.com/resource): Description of this resource

- [Tool Name](https://example.com/tool): What this tool does

- [Guide Name](https://example.com/guide): What this guide covers

## Optional section

- [Extra Link](https://example.com/extra): Additional content description

And here's an example of a complete llms.txt file for a fictional tech blog called JuicyTech Hub:

# JuicyTech Hub 🍊

> Serving up fresh tech knowledge, one squeeze at a time

## Fresh content 🥤

- [React Hooks That Hit Different](https://example.com/react-hooks-guide): Extract maximum flavor from React's juiciest features

- [CSS Grid: No Pulp Edition](https://example.com/css-grid-clean): Smooth layouts without the messy bits

- [TypeScript Smoothie Recipe](https://example.com/typescript-basics): Blend JavaScript with types for a perfectly balanced code cocktail

## The juice bar 🍹

- [API Design That Pops](https://example.com/api-design-tips): Craft APIs so refreshing your users will come back for seconds

- [Database Squeezing 101](https://example.com/database-optimization): Get every drop of performance from your queries

- [Docker Containers: Freshly Pressed](https://example.com/docker-tutorial): Package your apps like orange juice concentrate

## Fresh tools 🧃

- [Code Juicer Pro](https://example.com/tools/code-analyzer): Squeeze out bugs before they spoil your batch

- [Performance Blender](https://example.com/tools/speed-test): Mix and measure your site's load time smoothie

- [Syntax Zester](https://example.com/tools/formatter): Add that perfect finishing touch to your code

The first step of the instructions is crucial: if you don't know which articles or pages to include, you won't be able to create the file.

- The implementation. After writing and saving the file, the only thing left to do is to upload it to your site.

As you can see, creating an llms.txt file may seem straightforward, but when there's a large amount of content to include, planning becomes more challenging. Additionally, if you're not familiar with Markdown, you may end up with a malformed file that is difficult, or even impossible, for AI systems to crawl and interpret correctly.
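
If hand-writing Markdown feels error-prone, the structure described above (an H1, a blockquote summary, then H2 sections with link lists) can also be assembled by a short script. The following Python sketch is one possible way to do it, not an official tool; the `Link` and `Section` classes and the `build_llms_txt` function are hypothetical names we introduce here.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    title: str
    url: str
    note: str = ""  # optional description shown after the colon


@dataclass
class Section:
    heading: str
    links: list[Link] = field(default_factory=list)


def build_llms_txt(site_name: str, summary: str, sections: list[Section]) -> str:
    """Render an H1, a blockquote summary, and H2 sections of link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for section in sections:
        lines.append(f"## {section.heading}")
        lines.append("")
        for link in section.links:
            item = f"- [{link.title}]({link.url})"
            if link.note:
                item += f": {link.note}"
            lines.append(item)
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"
```

You could then save the result with `Path("llms.txt").write_text(text, encoding="utf-8")` from Python's `pathlib` module. The script only handles formatting; deciding which pages go into each section is still up to you.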

Where to put the llms.txt file?

As mentioned earlier in the article, the llms.txt file must be placed in the root directory of your website. To do so, follow the same steps as you did with the robots.txt file, as it's placed in the same location.

- First, you have to create the file by following the instructions we gave you here.

- As soon as you have the file, access your site’s server file manager or connect via an FTP client.

- Find the root directory. Its location depends on the method and platform you use to upload the file, but it is often named public_html, www, or htdocs, and it is the same place as your index.html or index.php file.

- Now, upload the llms.txt file.

- After uploading it, it has to be accessible via https://www.example.com/llms.txt. If you visit that URL and you can see the file, you've done it correctly!
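
If you prefer to automate that last check, a small Python script using only the standard library can do it. This is a minimal sketch under our own assumptions: the function names are hypothetical, and the "starts with an H1" test is just a rough sanity check based on the structure described earlier, not a full validation.

```python
from urllib.error import URLError
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def llms_txt_url(any_page_url: str) -> str:
    """Build the root-level llms.txt URL from any URL on the same site."""
    parts = urlsplit(any_page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/llms.txt", "", ""))


def looks_like_llms_txt(text: str) -> bool:
    """Rough check: the first non-empty line should be a Markdown H1."""
    for line in text.splitlines():
        if line.strip():
            return line.lstrip("\ufeff").startswith("# ")
    return False


def check_llms_txt(site_url: str, timeout: float = 10.0) -> bool:
    """Fetch /llms.txt and confirm it is reachable and starts with an H1."""
    try:
        with urlopen(llms_txt_url(site_url), timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
            return response.status == 200 and looks_like_llms_txt(body)
    except URLError:
        # Covers HTTP errors such as 404 as well as connection failures.
        return False
```

Calling `check_llms_txt("https://www.example.com")` makes a real network request, so run it only against your own site.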

To ensure your implementation is complete and your site is fully optimized for AI crawlers, consider running a comprehensive SEO audit to verify all technical elements are in place.

Are there any tools to help generate an llms.txt file?

Yes, there are tools that can help you generate llms.txt files for your site. We've created our own llms.txt generator that guides you through the strategic content selection process while handling the Markdown formatting for you—giving you the best of both worlds: strategic control with automated technical execution.

Other available tools include free WordPress plugins like LLMs.txt and LLMs-Full.txt Generator or LLMs.txt Generator (we're not big fans of using plugins…), and fee-based services like Datadog.

However, it's important to understand that purely automatic generation without strategy doesn't make much sense for a file like this. The main goal of llms.txt files is for you to decide what is worth adding to AI databases, what the most important pages and pieces of content are, and for that reason it requires strategy. It won't be useful to create a file with all the info from your website and not specific instructions for AI to know how to handle all that info. That's why we recommend using a strategic approach, either with our guided tool or by doing it yourself manually, making good content-wise decisions, learning how to use Markdown, and uploading a file that is actually useful for Large Language Models.
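
To illustrate what a strategic approach could look like in practice, here is a minimal Python sketch that narrows a page inventory down to a shortlist before any file is generated. Everything in it is an assumption for illustration: the `Page` fields, the manually assigned `priority` score, and the default limits are ours, and you would replace them with whatever criteria fit your site.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    url: str
    topic: str
    priority: int  # hypothetical editorial score; higher means more important


def shortlist(
    pages: list[Page], max_per_topic: int = 3, min_priority: int = 1
) -> dict[str, list[Page]]:
    """Keep only the highest-priority pages for each topic."""
    by_topic: dict[str, list[Page]] = {}
    # Visit pages from most to least important.
    for page in sorted(pages, key=lambda p: p.priority, reverse=True):
        if page.priority < min_priority:
            continue
        bucket = by_topic.setdefault(page.topic, [])
        if len(bucket) < max_per_topic:
            bucket.append(page)
    return by_topic
```

Each topic in the result can become one H2 section of the file, with the shortlisted pages as its links.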